Running Lighthouse in GitLab CI with Metrics Report

Google Chrome's Lighthouse tool is a great resource in the browser and has become the standard for basic performance and best-practice metrics on websites. While useful in the browser, a good continuous integration (CI) pipeline includes all the testing practical to identify any issues as early as possible. To that end, this post details how to run Lighthouse via the CLI in GitLab CI and collect a GitLab metrics report so any changes are reported in merge requests.

Lighthouse versus Lighthouse CI #

Lighthouse can be used to analyze a single URL and provide a report with the results, similar to those obtained when running it in a browser. Lighthouse CI provides a wrapper around Lighthouse to be able to run analyses against multiple URLs, run with multiple retries for each page, set specific thresholds to fail the tests, track metrics for a URL over time, and more. This also requires uploading the results to a Lighthouse CI server (at least to track results over time).

The goals for this example, which is based on analysis of a static site (like this one), are to:

- Run Lighthouse against a representative page to identify changes and provide an HTML report to view the detailed results. This should be done for both a mobile and desktop browser configuration.

- Make as much data as practical available through the GitLab merge request UI. This is intended to reinforce the existing philosophy in GitLab using merge request widgets as the portal to all merge request related data, and minimize the cases where you have to navigate elsewhere to view data.

Given those goals, Lighthouse is used in this example. In general, Lighthouse CI could be used instead, but requires some changes to CLI commands, configuration files, and a Lighthouse CI server (which is then another place to go to view results). See the Lighthouse CI documentation for additional details.

Lighthouse container image #

The desire in this case, as with most GitLab CI jobs, is to run Lighthouse in a container (for so many reasons that it needs to be a post unto itself). While Lighthouse and Lighthouse CI are both available as npm packages which can be run from the CLI, they do not install a version of Chromium, unlike some other Chromium-based analysis tools, instead requiring it to be installed on the platform (that is, in the container).

While a GitLab CI job could be set up to install all required dependencies on each execution, this can be a lengthy process, so to reduce pipeline execution times a dedicated container with all dependencies pre-installed is preferred. Since none with the desired capabilities were easily found, I created a new Lighthouse container image project to build and publish an image. This image is based on the Node 18 Debian Bullseye Slim image with the following dependencies installed:

- chromium: The latest Chromium release for Debian Bullseye.

- lighthouse: The npm package for running Lighthouse via the CLI.

- @lhci/cli: The npm package for running Lighthouse CI via the CLI.

- serve: A CLI-based static web server.

- wait-on: A CLI-based tool to wait for a web server (or other resources) to become available.

This image also runs by default as a non-privileged user. This is not only a general best practice; running as root also requires running Chromium without a sandbox (that is, with the --no-sandbox argument). While running in a container itself minimizes the risk of running without a sandbox, it's certainly desirable to have one when possible.

The container images for this project are hosted on gitlab.com in the project's container registry as registry.gitlab.com/gitlab-ci-utils/lighthouse. The latest tag is recommended, but others are available. See the project documentation for additional details on the project and the full Dockerfile, if interested.

Running Lighthouse #

To run Lighthouse, the previously detailed container image is used for the CI job. The needs property should be set to any job or jobs required to build the site to be tested, which then triggers the Lighthouse job as soon as any required artifacts are available. As noted previously, a goal is to run Lighthouse in both a mobile and desktop configuration, so a variable is set to specify the form factor, which is used in the job script and when generating the metrics report.

    lighthouse_mobile:
      image: registry.gitlab.com/gitlab-ci-utils/lighthouse:latest
      stage: test
      needs:
        - job_that_builds_site
      variables:
        FORM_FACTOR: 'mobile'
      ...

The page to be tested is served by a static server running in the container. This is run in before_script to optimize the ability to re-use this job across projects. If it is used for a different web application needing a different server, only the before_script has to be overridden, and only with the script to start the server. In this case serve is used since it is installed in the container image, and it is passed the directory containing the site. The output from serve, both stdout and stderr, is redirected to /dev/null with > /dev/null 2>&1 to avoid polluting the job log. Finally, wait-on is called with the URL to be tested, which causes the script to wait until the server is running and successfully serves the page.

    lighthouse_mobile:
      ...
      before_script:
        - serve ./site_folder/ > /dev/null 2>&1 & wait-on http://localhost:3000/
      ...

The script runs lighthouse, formatted as a multi-line block for readability, and passes the URL to be tested and the following test options as CLI arguments:

- Run in a headless browser.

- Test only a subset of the Lighthouse categories.

- Output both a JSON and HTML report. The HTML report provides a more convenient way to view the full results. The JSON report is used to extract the scores to a metrics report.

- Specify the root name of the reports via --output-path, using the FORM_FACTOR variable declared previously. This is appended with ".report.<format>", so the final JSON report name, for example, is lighthouse-mobile.report.json.

- The $LIGHTHOUSE_ARGUMENTS variable is included to allow for adding CLI arguments without having to override the script (although in this job it is empty).
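The report naming rule described in the list can be sketched in a few lines of JavaScript. The helper name reportNames is hypothetical and exists only for illustration; it mirrors the ".report.<format>" suffix described here and is not part of Lighthouse.

```javascript
// Hypothetical helper: mirrors how the report names described above are
// derived from the --output-path root and the requested output formats.
function reportNames(formFactor, formats) {
  const root = `lighthouse-${formFactor}`; // value passed to --output-path
  return formats.map((format) => `${root}.report.${format}`);
}

console.log(reportNames('mobile', ['json', 'html']));
```

These are the same file names that later have to be listed under the job's artifacts.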

If you're running this on a gitlab.com shared runner, the --no-sandbox argument should not be required. If this is run on a different runner, or sandbox errors are encountered, the --chrome-flags argument can be updated to --chrome-flags="--headless --no-sandbox".

    lighthouse_mobile:
      ...
      script:
        - >
          lighthouse http://localhost:3000/
          --chrome-flags="--headless"
          --only-categories="accessibility,best-practices,performance,seo"
          --output="json,html"
          --output-path="lighthouse-$FORM_FACTOR"
          $LIGHTHOUSE_ARGUMENTS
      ...

If desired, some of this configuration could be specified via a Lighthouse configuration file. Since that can be defined as a JavaScript module, the variable FORM_FACTOR would be available there as well to update the configuration.
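As a rough sketch of that idea, a configuration module could read FORM_FACTOR from the environment and adjust the settings accordingly. The file name lighthouse-config.js and the helper buildConfig are hypothetical, the desktop screen emulation values are assumptions modeled on Lighthouse's desktop preset, and the sketch uses CommonJS (newer Lighthouse releases may expect an ES module with a default export instead), so treat it as a starting point rather than a verified configuration.

```javascript
// lighthouse-config.js (hypothetical file name), which would be passed to
// the CLI with --config-path=./lighthouse-config.js.

// Build a Lighthouse config object for the given form factor.
function buildConfig(formFactor) {
  const isDesktop = formFactor === 'desktop';
  const settings = {
    // Same categories as the --only-categories CLI argument in the job.
    onlyCategories: ['accessibility', 'best-practices', 'performance', 'seo'],
    formFactor: isDesktop ? 'desktop' : 'mobile',
  };
  if (isDesktop) {
    // Assumed values, modeled on Lighthouse's desktop preset; verify them
    // against the Lighthouse version in use.
    settings.screenEmulation = {
      mobile: false,
      width: 1350,
      height: 940,
      deviceScaleFactor: 1,
      disabled: false,
    };
  }
  return { extends: 'lighthouse:default', settings };
}

// FORM_FACTOR is the variable set in the CI job; anything other than
// 'desktop' (including unset) falls back to the mobile defaults.
module.exports = buildConfig(process.env.FORM_FACTOR);
```

The desktop preset also changes other settings, such as throttling, which this sketch does not cover, so passing --preset=desktop on the command line remains the simpler route for this example.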

Lastly, the HTML and JSON Lighthouse reports are saved as artifacts. In addition, when: always is set to ensure that any artifacts are saved even on job failure.

Putting all of that together results in the following job to run Lighthouse testing against a mobile configuration.

    lighthouse_mobile:
      image: registry.gitlab.com/gitlab-ci-utils/lighthouse:latest
      stage: test
      needs:
        - job_that_builds_site
      variables:
        FORM_FACTOR: 'mobile'
      before_script:
        - serve ./site_folder/ > /dev/null 2>&1 & wait-on http://localhost:3000/
      script:
        - >
          lighthouse http://localhost:3000/
          --chrome-flags="--headless"
          --only-categories="accessibility,best-practices,performance,seo"
          --output="json,html"
          --output-path="lighthouse-$FORM_FACTOR"
          $LIGHTHOUSE_ARGUMENTS
      artifacts:
        when: always
        paths:
          - lighthouse-$FORM_FACTOR.report.html
          - lighthouse-$FORM_FACTOR.report.json

To run Lighthouse testing against a desktop configuration, the previous lighthouse_mobile job can be extended, and minimal additional overrides are required based on that job's definition.

- Change the FORM_FACTOR variable to update the report names.

- Add the --preset=desktop CLI argument via the LIGHTHOUSE_ARGUMENTS variable, which updates multiple Lighthouse settings (for example --form-factor, --screenEmulation).

    lighthouse_desktop:
      extends:
        - lighthouse_mobile
      variables:
        LIGHTHOUSE_ARGUMENTS: '--preset=desktop'
        FORM_FACTOR: 'desktop'

Exposing artifacts in merge requests #

GitLab provides the capability to specify job artifacts that are exposed with links directly in the merge request UI, which avoids having to go to the job to view those artifacts. This is accomplished by adding the expose_as property to the job artifacts with the name to display with the artifacts.

Unfortunately, there's an open issue in GitLab where the expose_as capability does not work when there are variables in the artifacts:paths names, as was done in the previous job definition. In this case the artifacts are properly saved, but they're not exposed in the merge request UI. So, to have the artifacts exposed, the FORM_FACTOR variable needs to be backed out of artifacts:paths and the paths updated to list the complete names. This has to be repeated in both jobs since the FORM_FACTOR variable is different in each case to uniquely identify the reports. Note that for the lighthouse_desktop job only the artifacts:paths must be specified since the other values are still inherited from the lighthouse_mobile job.

    lighthouse_mobile:
      ...
      artifacts:
        when: always
        expose_as: 'Lighthouse Report'
        paths:
          - lighthouse-mobile.report.html
          - lighthouse-mobile.report.json

    lighthouse_desktop:
      ...
      artifacts:
        paths:
          - lighthouse-desktop.report.html
          - lighthouse-desktop.report.json

With these changes made, once the CI pipeline is complete, the Lighthouse report artifacts are exposed in the merge request UI as shown in the screenshot below. Note that the individual jobs are listed as well to identify the source.

Generating a metrics report #

Note that metrics reports are a GitLab Premium feature and not available in GitLab Free. If you're using GitLab for open source work see the GitLab Open Source Program for details on how to register to get free GitLab Ultimate licenses for those projects to unlock this feature and many others.

The last step is to generate a GitLab metrics report for the results. A metrics report is simply a file of key/value pairs, each on a new line, conforming to the OpenMetrics format. GitLab ingests the report and identifies any changes in the merge request UI: new metrics, removed metrics, or metrics with changed values. As with all merge request reporting, GitLab reports changes from the source branch in that merge request, which allows easy identification when any of the scores change. In this case the goal is to have metrics for the score in each Lighthouse category and form factor being analyzed, so the metric keys take the form lighthouse{category="performance",form_factor="mobile"}.
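As a rough illustration of that format, the sketch below builds the lines of such a report from hard-coded sample scores. The scores and the helper name metricLine are made up for the example and are not taken from a real Lighthouse run.

```javascript
// Hypothetical helper: format one metric as a "key value" line.
function metricLine(category, formFactor, score) {
  return `lighthouse{category="${category}",form_factor="${formFactor}"} ${score}`;
}

// Made-up sample scores, already scaled to the 0 to 100 range.
const sampleScores = { performance: 99, accessibility: 100 };

// One metric per line, as expected in the metrics report file.
const report = Object.entries(sampleScores)
  .map(([category, score]) => metricLine(category, 'mobile', score))
  .join('\n');

console.log(report);
// lighthouse{category="performance",form_factor="mobile"} 99
// lighthouse{category="accessibility",form_factor="mobile"} 100
```

Because the form factor is part of the key, the mobile and desktop jobs produce distinct metrics even though the category names are the same.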

The results are taken from the Lighthouse JSON report. There's a lot of data in the JSON report, the full details of which are beyond the scope of this post, but the desired data in this case is summarized below. At the root level there's a categories object, which has keys for each category, each of which is an object with a score property that is a value between 0 and 1.

    {
      ...
      "categories": {
        "performance": {
          ...
          "score": 0.99
        },
        "accessibility": {
          ...
          "score": 1
        },
        "best-practices": {
          ...
          "score": 1
        },
        "seo": {
          ...
          "score": 1
        }
      },
      ...
    }

To generate the metrics report, add the JavaScript file lighthouse-metrics.js to the project. In this case JavaScript is used for simplicity since this is already run in a Node.js container. This module uses the FORM_FACTOR environment variable defined in the job to determine the results file, opens the file and parses the JSON results, creates an array of formatted metrics with scores from 0 to 100, then joins them together, one on each line, and finally saves the resulting metrics file.

Optional chaining is used when retrieving the metrics so that an individual missing metric does not raise an error; the value is simply reported as NaN (since undefined multiplied by a number is NaN).
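The NaN behavior can be checked in isolation with a small sketch. The partialResults object and the scaledScore helper are hypothetical stand-ins for a parsed report and the lookup, and this sketch chains on every level, including the categories object itself.

```javascript
// Hypothetical parsed report that lacks the "seo" category entirely.
const partialResults = { categories: { performance: { score: 0.5 } } };

// Returns the score scaled to 0-100, or NaN when the category or its
// score is missing (undefined * 100 evaluates to NaN).
function scaledScore(results, category) {
  return results?.categories?.[category]?.score * 100;
}

console.log(scaledScore(partialResults, 'performance')); // 50
console.log(scaledScore(partialResults, 'seo')); // NaN
console.log(scaledScore({}, 'seo')); // NaN, even with no categories object
```

The lighthouse-metrics.js script that follows relies on the same idea when it reads each category score.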

    const fs = require('fs');

    // Form factor set by the CI job (for example 'mobile' or 'desktop').
    const formFactor = process.env.FORM_FACTOR || '';
    // Lighthouse scores range from 0 to 1; scale them to 0 to 100.
    const scoreScalingFactor = 100;
    const reportFileName = `lighthouse-${formFactor}.report.json`;
    const metricsFileName = 'lighthouse-metrics.txt';
    const categories = ['performance', 'accessibility', 'best-practices', 'seo'];

    const results = JSON.parse(fs.readFileSync(reportFileName, 'utf8'));

    // A missing category or score yields undefined, which is reported as NaN.
    const metrics = categories
      .map(
        (category) =>
          `lighthouse{category="${category}",form_factor="${formFactor}"} ${
            results?.categories?.[category]?.score * scoreScalingFactor
          }`
      )
      .join('\n');

    fs.writeFileSync(metricsFileName, metrics);

With this script available, the CI jobs need to be updated to run it. It is executed in after_script rather than script since a failure in after_script does not fail the job, and the job should not fail only for a metrics-related failure. This is run with the node command since project files pulled by the GitLab runner are not given executable permission. The CI job artifacts also need to be updated with a reports property for GitLab to import the report. Note the metrics report does not need to be added to artifacts:paths since reports are ingested directly by GitLab, not saved with other artifacts.

lighthouse_mobile:

...

after_script:

- node ./lighthouse-metrics.js

...

artifacts:

...

reports:

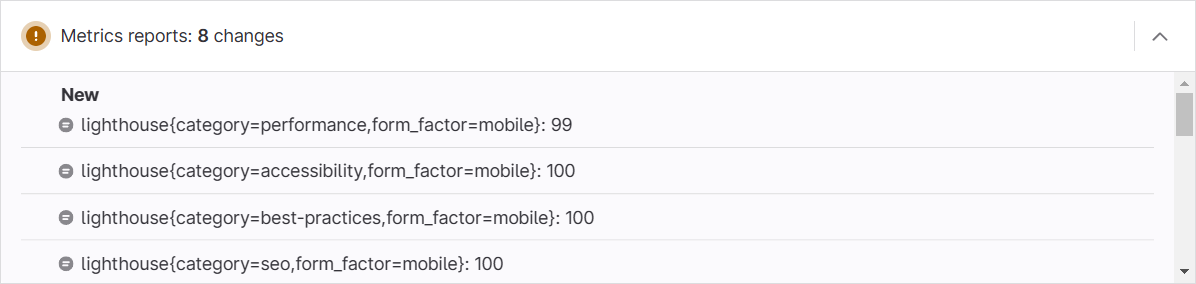

metrics: lighthouse-metrics.txtWith those updates, the first merge request to create the metrics shows results like the screenshot below, identifying the 8 new metrics (4 categories * 2 form factors) and giving the values.

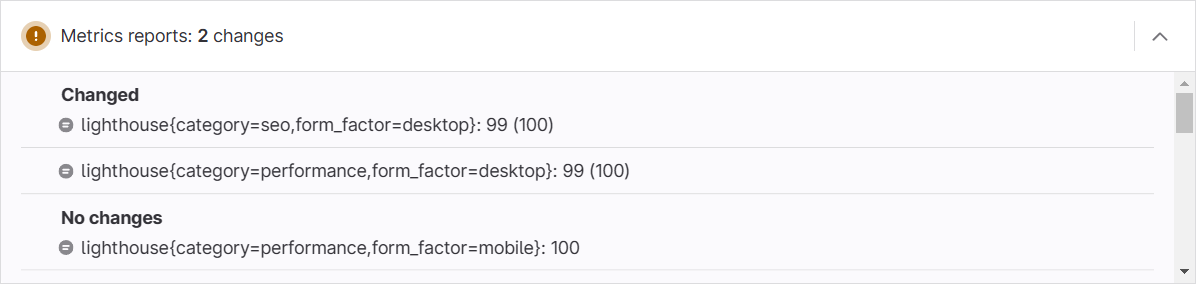

In future merge requests, any Lighthouse score changes are identified as changed values as shown in the screenshot below.

Final CI job #

Putting all of these pieces together, the final two jobs are shown below.

    lighthouse_mobile:
      image: registry.gitlab.com/gitlab-ci-utils/lighthouse:latest
      stage: test
      needs:
        - job_that_builds_site
      variables:
        FORM_FACTOR: 'mobile'
      before_script:
        - serve ./site_folder/ > /dev/null 2>&1 & wait-on http://localhost:3000/
      script:
        - >
          lighthouse http://localhost:3000/ --chrome-flags="--headless"
          --only-categories="accessibility,best-practices,performance,seo"
          --output="json,html" --output-path="lighthouse-$FORM_FACTOR"
          $LIGHTHOUSE_ARGUMENTS
      after_script:
        - node ./lighthouse-metrics.js
      artifacts:
        when: always
        expose_as: 'Lighthouse Report'
        paths:
          - lighthouse-mobile.report.html
          - lighthouse-mobile.report.json
        reports:
          metrics: lighthouse-metrics.txt

    lighthouse_desktop:
      extends:
        - lighthouse_mobile
      variables:
        LIGHTHOUSE_ARGUMENTS: '--preset=desktop'
        FORM_FACTOR: 'desktop'
      artifacts:
        paths:
          - lighthouse-desktop.report.html
          - lighthouse-desktop.report.json

Aaron Goldenthal
